Facebook Rolls Out AI To Detect Suicidal Posts Before They Are Reported

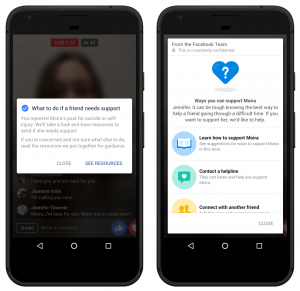

Facebook is rolling out software designed to save lives. Its new “proactive detection” artificial intelligence technology will scan all posts for patterns of suicidal thoughts and, when necessary, send mental health resources to the user at risk or their friends, or contact local first responders. By using AI to flag worrisome posts to human moderators instead of waiting for user reports, Facebook can decrease how long it takes to send help.

Facebook previously tested using AI to detect troubling posts and to surface suicide reporting options more prominently to friends in the U.S. Now Facebook will scour all types of content around the world with this AI, except in the European Union, where General Data Protection Regulation privacy laws on profiling users based on sensitive information complicate the use of this tech.

How suicide reporting works on Facebook now

Through the combination of AI, human moderators and crowdsourced reports, Facebook could try to prevent tragedies like the one last month, when a father killed himself on Facebook Live. Live broadcasts in particular have the power to wrongly glorify suicide and to affect a large audience, because everyone sees the content simultaneously, unlike recorded Facebook videos, which can be flagged and taken down before many people view them. Hence the need for the new precautions.

Now, if someone is expressing thoughts of suicide in any type of Facebook post, Facebook’s AI will both proactively detect it and flag it to prevention-trained human moderators, and make reporting options for viewers more accessible.

When a report comes in, Facebook’s tech can highlight the part of the post or video that matches suicide-risk patterns or that is receiving concerned comments. That spares moderators from having to skim through a whole video themselves. The AI also prioritizes these user reports as more urgent than other types of content-policy violations, such as depictions of violence or nudity. Facebook says that these accelerated reports get escalated to local authorities twice as fast as unaccelerated reports.
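To make the idea of prioritized review concrete, here is a minimal sketch of a review queue that hands moderators the most urgent reports first. It is purely illustrative and is not Facebook's system: the `URGENCY` ranks, the `Report` and `ReviewQueue` names, and the category labels are all assumptions invented for this example.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical urgency ranks (lower is reviewed first); not Facebook's actual values.
URGENCY = {"suicide_risk": 0, "violence": 1, "nudity": 2}


@dataclass(order=True)
class Report:
    priority: int                          # urgency rank, compared first
    seq: int                               # arrival order, breaks ties
    post_id: str = field(compare=False)
    category: str = field(compare=False)


class ReviewQueue:
    """Priority queue of reports waiting for a human moderator."""

    def __init__(self):
        self._heap = []
        self._seq = count()

    def submit(self, post_id, category):
        # Unknown categories fall behind every known one.
        priority = URGENCY.get(category, len(URGENCY))
        heapq.heappush(
            self._heap, Report(priority, next(self._seq), post_id, category)
        )

    def next_report(self):
        # Return the most urgent report, or None if the queue is empty.
        return heapq.heappop(self._heap) if self._heap else None


queue = ReviewQueue()
queue.submit("post-1", "nudity")
queue.submit("post-2", "suicide_risk")
queue.submit("post-3", "violence")

first = queue.next_report()
print(first.post_id, first.category)  # post-2 suicide_risk
```

In this sketch, reports in the same category are reviewed in the order they arrived, so an urgent report jumps ahead of less urgent ones without reordering its peers.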

Back in February, Facebook CEO Mark Zuckerberg wrote that “There have been terribly tragic events — like suicides, some live streamed — that perhaps could have been prevented if someone had realized what was happening and reported them sooner. Artificial intelligence can help provide a better approach.”
